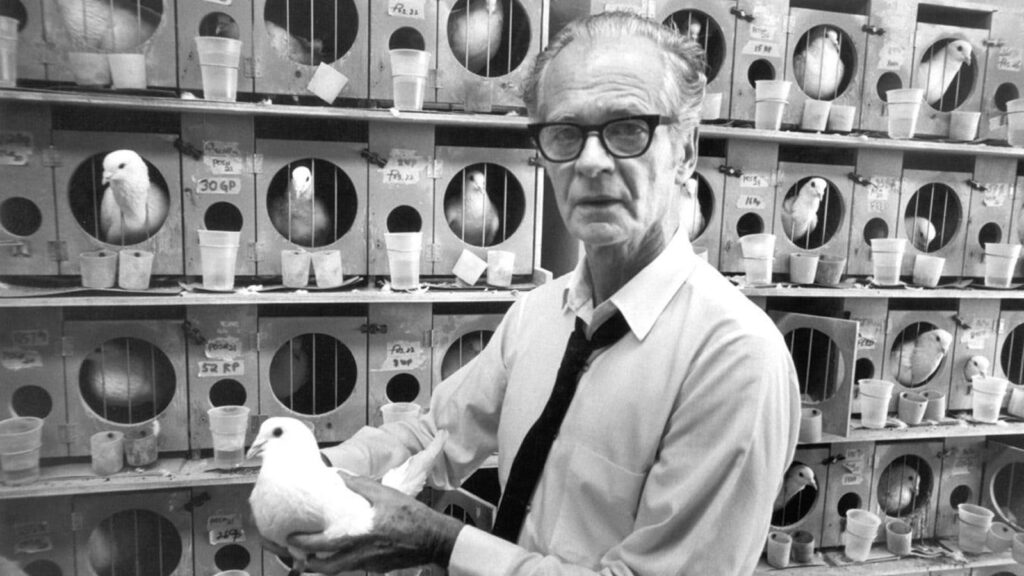

Nearly a century ago, psychologist B.F. Skinner pioneered a controversial school of thought, behaviorism, to explain human and animal behavior. Behaviorism directly inspired modern reinforcement learning in AI, even giving the field its name. Source: Ken Heyman

There aren’t many truly new ideas in artificial intelligence.

More often, breakthroughs in AI happen when concepts that have existed for years suddenly take on new power because the underlying technology inputs (in particular, raw computing power) finally catch up to unlock those concepts’ full potential.

Famously, Geoff Hinton and a small group of collaborators devoted themselves tirelessly to neural networks starting in the early 1970s. For decades, the technology didn’t really work and the outside world paid little attention. It was not until the early 2010s, thanks to the arrival of sufficiently powerful Nvidia GPUs and internet-scale training data, that the potential of neural networks was finally unleashed for all to see. In 2024, more than half a century after he began working on neural networks, Hinton was awarded the Nobel Prize for pioneering the field of modern AI.

Reinforcement learning has followed a similar arc.

Richard Sutton and Andrew Barto, the fathers of modern reinforcement learning, laid down the foundations of the field starting in the 1970s. Even before Sutton and Barto began their work, the basic principles underlying reinforcement learning (in short, learning by trial and error based on positive and negative feedback) had been developed by behavioral psychologists and animal researchers going back to the early twentieth century.

Yet in just the past year, advances in reinforcement learning (RL) have taken on newfound importance and urgency in the world of AI. It has become increasingly clear that the next leap in AI capabilities will be driven by RL. If artificial general intelligence (AGI) is in fact around the corner, reinforcement learning will play a central role in getting us there. Just a few years ago, when ChatGPT’s launch ushered in the era of generative AI, almost no one would have predicted this.

Deep questions remain unanswered about reinforcement learning’s capabilities and its limits. No field in AI is moving more quickly today than RL. It has never been more important to understand this technology, its history and its future.

Reinforcement Learning 101

The basic principles of reinforcement learning have remained consistent since Sutton and Barto established the field in the 1970s.

The essence of RL is to learn by interacting with the world and seeing what happens. It’s a universal and foundational form of learning; every human and animal does it.

In the context of artificial intelligence, a reinforcement learning system consists of an agent interacting with an environment. RL agents aren’t given direct instructions or answers by humans; instead, they learn through trial and error. When an agent takes an action in an environment, it receives a reward signal from the environment, indicating that the action produced either a positive or a negative outcome. The agent’s goal is to adjust its behavior to maximize positive rewards and minimize negative rewards over time.

How does the agent decide which actions to take? Every agent acts according to a policy, which can be understood as the formula or calculus that determines the agent’s action based on the particular state of the environment. A policy can be a simple set of rules, or even pure randomness, or it can be represented by a far more complex system, like a deep neural network.
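The loop described above (act, observe a reward, adjust) can be sketched in a few lines of Python. This is a minimal illustration rather than a production algorithm: the two-action `ToyEnvironment`, its payoff probabilities, and the epsilon-greedy rule used as the policy are all assumptions invented for this example.

```python
import random


class ToyEnvironment:
    """Hypothetical environment: two actions, one pays off more often."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.win_prob = {"left": 0.2, "right": 0.8}  # assumed payoff odds

    def step(self, action):
        # Reward signal: +1 for a good outcome, -1 for a bad one.
        return 1 if self.rng.random() < self.win_prob[action] else -1


class Agent:
    def __init__(self, actions, epsilon=0.1, seed=1):
        self.rng = random.Random(seed)
        self.epsilon = epsilon  # how often to explore at random
        self.estimates = {a: 0.0 for a in actions}  # running average reward
        self.counts = {a: 0 for a in actions}

    def policy(self):
        # Simple rule: usually pick the action with the best average so far,
        # occasionally pick at random to keep exploring.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.estimates))
        return max(self.estimates, key=self.estimates.get)

    def learn(self, action, reward):
        # Incremental update of the average reward seen for this action.
        self.counts[action] += 1
        self.estimates[action] += (reward - self.estimates[action]) / self.counts[action]


def run(steps=2000):
    env = ToyEnvironment()
    agent = Agent(["left", "right"])
    for _ in range(steps):
        action = agent.policy()      # agent acts according to its policy
        reward = env.step(action)    # environment returns a reward signal
        agent.learn(action, reward)  # agent adjusts its behavior
    return agent
```

Calling `run()` returns an agent whose estimate for the better-paying action should end up well above its estimate for the other, so the policy chooses that action most of the time.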

One final concept that is important to understand in RL, closely related to the reward signal, is the value function. The value function is the agent’s estimate of how favorable a given state of the environment will be (that is, how many positive and negative rewards it will lead to) over the long run. Whereas reward signals are immediate pieces of feedback that come from the environment based on current conditions, the value function is the agent’s own learned estimate of how things will play out in the long term.

The entire purpose of value functions is to estimate reward signals, but unlike reward signals, value functions enable agents to reason and plan over longer time horizons. For instance, value functions can incentivize actions even when they lead to negative near-term rewards because the long-term benefit is estimated to be worth it.
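To see how a long-term estimate can justify a short-term loss, consider the discounted sum of future rewards, which is the quantity a value function is commonly trained to estimate. The sketch below is illustrative only: the two reward sequences and the discount factor `gamma` are assumptions chosen for this example, and the function computes the sum from known rewards rather than learning an estimate.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards, with each later reward weighted by one more factor of gamma."""
    total = 0.0
    # Work backwards so each step adds its reward to the discounted future.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical reward sequences for two courses of action.
sacrifice_now = [-1, 0, 0, 10]  # immediate loss, large payoff later
play_it_safe = [1, 0, 0, 0]     # small immediate gain, nothing after

long_term = discounted_return(sacrifice_now)  # -1 + 0.9**3 * 10, about 6.29
short_term = discounted_return(play_it_safe)  # exactly 1.0
```

An agent that looked only at the immediate reward would prefer the second sequence; one that estimates long-run value would prefer the first.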

When RL agents learn, they do so in one of three ways: by updating their policy, updating their value function, or updating both together.

A brief example will help make these concepts concrete.

Imagine applying reinforcement learning to the game of chess. In this case, the agent is an AI chess player. The environment is the chess board, with any given configuration of chess pieces representing a state of that environment. The agent’s policy is the function (whether a simple set of rules, or a decision tree, or a neural network, or something else) that determines which move to make based on the current board state. The reward signal is simple: positive when the agent wins a game, negative when it loses a game. The agent’s value function is its learned estimate of how favorable or unfavorable any given board position is; that is, how likely the position is to lead to a win or a loss.

As the agent plays more games, strategies that lead to wins will be positively reinforced and strategies that lead to losses will be negatively reinforced via updates to the agent’s policy and value function. Gradually, the AI system will become a stronger chess player.

In the twenty-first century, one organization has championed and advanced the field of reinforcement learning more than any other: DeepMind.

Founded in 2010 as a startup devoted to solving artificial intelligence and then acquired by Google in 2014 for ~$600 million, London-based DeepMind made a big early bet on reinforcement learning as the most promising path forward in AI.

And the bet paid off.

The second half of the 2010s were triumphant years for the field of reinforcement learning.

In 2016, DeepMind’s AlphaGo became the first AI system to defeat a human world champion at the ancient Chinese game of Go, a feat that many AI experts had believed was impossible. In 2017, DeepMind debuted AlphaZero, which taught itself Go, chess and Japanese chess entirely via self-play and bested every other AI and human competitor in those games. And in 2019, DeepMind unveiled AlphaStar, which mastered the video game StarCraft, an even more complex environment than Go given the vast action space, imperfect information, numerous agents and real-time gameplay.

AlphaGo, AlphaZero, AlphaStar: reinforcement learning powered each of these landmark achievements.

As the 2010s drew to a close, RL seemed poised to dominate the coming generation of artificial intelligence breakthroughs, with DeepMind leading the way.

But that’s not what happened.

Right around that time, a new AI paradigm unexpectedly burst into the spotlight: self-supervised learning for autoregressive language models.

In 2019, a small nonprofit research lab named OpenAI released a model named GPT-2 that demonstrated surprisingly powerful general-purpose language capabilities. The following summer, OpenAI debuted GPT-3, whose astonishing abilities represented a massive leap in performance from GPT-2 and took the AI world by storm. In 2022 came ChatGPT.

In short order, every AI organization in the world reoriented its research focus to prioritize large language models and generative AI.

These large language models (LLMs) were based on the transformer architecture and made possible by a strategy of aggressive scaling. They were trained on unlabeled datasets that were bigger than any previous AI training data corpus (essentially the entire internet) and were scaled up to unprecedented model sizes. (GPT-2 was considered mind-bogglingly large at 1.5 billion parameters; one year later, GPT-3 debuted at 175 billion parameters.)

Reinforcement learning fell out of fashion for half a decade. A widely repeated narrative during the early 2020s was that DeepMind had seriously misread technology trends by committing itself to reinforcement learning and missing the boat on generative AI.

Yet today, reinforcement learning has reemerged as the hottest field within AI. What happened?

In short, AI researchers discovered that applying reinforcement learning to generative AI models was a killer combination.

Starting with a base LLM and then applying reinforcement learning on top of it meant that, for the first time, RL could natively operate with the gift of language and broad knowledge about the world. Pretrained foundation models represented a powerful base on which RL could work its magic. The results have been dazzling, and we’re just getting started.

RL Meets LLMs

What does it mean, exactly, to combine reinforcement learning with large language models?

A key insight to start with is that the core concepts of RL can be mapped directly and elegantly to the world of LLMs.

In this mapping, the LLM itself is the agent. The environment is the full digital context in which the LLM is operating, including the prompts it is presented with, its context window, and any tools and external information it has access to. The model’s weights represent the policy: they determine how the agent acts when presented with any particular state of the environment. Acting, in this context, means generating tokens.

What about the reward signal and the value function? Defining a reward signal for LLMs is where things get interesting and complicated. It is this topic, more than any other, that will determine how far RL can take us on the path to superintelligence.

The first major application of RL to LLMs was reinforcement learning from human feedback, or RLHF. The frontiers of AI research have since advanced to more cutting-edge methods of combining RL and LLMs, but RLHF represents an important step on the journey, and it provides a concrete illustration of the concept of reward signals for LLMs.

RLHF was invented by DeepMind and OpenAI researchers back in 2017. (As a side note, given today’s competitive and closed research environment, it is remarkable to remember that OpenAI and DeepMind used to conduct and publish foundational research together.) RLHF’s true coming-out party, though, was ChatGPT.

When ChatGPT debuted in November 2022, the underlying AI model on which it was based was not new; it had already been publicly available for many months. The reason that ChatGPT became an overnight success was that it was approachable, easy to talk to, helpful and good at following instructions. The technology that made this possible was RLHF.

In a nutshell, RLHF is a method to adapt LLMs’ style and tone to be consistent with human-expressed preferences, whatever those preferences may be. RLHF is most often used to make LLMs “helpful, harmless and honest,” but it can equally be used to make them more flirtatious, or rude, or sarcastic, or progressive, or conservative.

How does RLHF work?

The key ingredient in RLHF is “preference data” generated by human subjects. Specifically, humans are asked to consider two responses from the model for a given prompt and to select which one of the two responses they prefer.

This pairwise preference data is used to train a separate model, known as the reward model, which learns to produce a numerical rating of how desirable or undesirable any given output from the main model is.
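One common way to turn pairwise preferences into a training signal for a reward model is a Bradley-Terry-style loss, which penalizes the reward model whenever it scores the rejected response above the preferred one. The article does not specify a loss function, so the sketch below is an assumption for illustration; `preference_loss` and its arguments are hypothetical names, and the scores stand in for a reward model’s outputs.

```python
import math


def preference_loss(score_chosen, score_rejected):
    """Negative log-probability that the chosen response is preferred.

    Models P(chosen preferred) as sigmoid(score_chosen - score_rejected),
    so the loss is -log(sigmoid(margin)) = log(1 + exp(-margin)).
    """
    margin = score_chosen - score_rejected
    return math.log1p(math.exp(-margin))


# Reward model agrees with the human label: small loss.
agrees = preference_loss(score_chosen=2.0, score_rejected=0.0)

# Reward model disagrees with the human label: large loss.
disagrees = preference_loss(score_chosen=0.0, score_rejected=2.0)
```

Minimizing this loss over many labeled pairs pushes the reward model to assign higher scores to the kinds of responses people preferred.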

This is where RL comes in. Now that we have a reward signal, an RL algorithm can be used to fine-tune the main model (in other words, the RL agent) so that it generates responses that maximize the reward model’s scores. In this way, the main model comes to incorporate the style and values reflected in the human-generated preference data.

Circling back to reward signals and LLMs: in the case of RLHF, as we have seen, the reward signal comes directly from humans and human-generated preference data, which is then distilled into a reward model.

What if we want to use RL to give LLMs powerful new capabilities beyond simply adhering to human preferences?

The Next Frontier

The most important development in AI over the past year has been language models’ improved ability to engage in reasoning.

What exactly does it mean for an AI model to “reason”?

Unlike first-generation LLMs, which respond to prompts using next-token prediction with no planning or reflection, reasoning models spend time thinking before producing a response. These models think by generating “chains of thought,” enabling them to systematically break down a given task into smaller steps and then work through each step in order to arrive at a well-thought-through answer. They also know how and when to use external tools (like a calculator, a code interpreter or the internet) to help solve problems.

The world’s first reasoning model, OpenAI’s o1, debuted less than a year ago. A few months later, China-based DeepSeek captured world headlines when it released its own reasoning model, R1, that was near parity with o1, fully open and trained using far less compute.

The secret sauce that gives AI models the ability to reason is reinforcement learning: specifically, an approach to RL known as reinforcement learning from verifiable rewards (RLVR).

Like RLHF, RLVR involves taking a base model and fine-tuning it using RL. But the source of the reward signal, and therefore the types of new capabilities that the AI gains, are quite different.

As its name suggests, RLVR improves AI models by training them on problems whose answers can be objectively verified: most commonly, math or coding tasks.

First, a model is presented with such a task (say, a challenging math problem) and prompted to generate a chain of thought in order to solve the problem.

The final answer that the model produces is then formally determined to be either correct or incorrect. (If it’s a math question, the final answer can be run through a calculator or a more complex symbolic math engine; if it’s a coding task, the model’s code can be executed in a sandboxed environment.)

Because we now have a reward signal (positive if the final answer is correct, negative if it is incorrect), RL can be used to positively reinforce the types of chains of thought that lead to correct answers and to discourage those that lead to incorrect answers.
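A verifiable reward of this kind can be very simple in code. The sketch below is a hypothetical example, not any lab’s actual verifier: it assumes the model was instructed to end its output with a line of the form `Answer: <number>`, and it checks that number against a known reference using exact rational arithmetic. The function name, the output format, and the +1/-1 reward values are all assumptions made for illustration.

```python
from fractions import Fraction


def verifiable_reward(model_output, reference_answer):
    """Return +1 if the final answer matches the reference, otherwise -1."""
    lines = model_output.strip().splitlines()
    # Assumed convention: the last line carries the final answer.
    if not lines or not lines[-1].startswith("Answer:"):
        return -1
    raw_answer = lines[-1][len("Answer:"):].strip()
    try:
        # Fraction parses integers, decimals, and "a/b" strings exactly.
        answer = Fraction(raw_answer)
    except (ValueError, ZeroDivisionError):
        return -1
    return 1 if answer == Fraction(reference_answer) else -1


# The chain of thought is ignored by the verifier; only the final answer counts.
output = "Half of 3 is 1.5, and a third of 1.5 is 0.5.\nAnswer: 0.5"
reward = verifiable_reward(output, "1/2")
```

Because only the final answer is checked, the reward says nothing directly about the quality of the intermediate reasoning; the RL algorithm reinforces whichever chains of thought tend to end in correct answers.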

The end result is a model that is far more effective at reasoning: that is, at accurately working through complex multi-step problems and landing on the correct solution.

This new generation of reasoning models has demonstrated astonishing capabilities in math competitions like the International Math Olympiad and on logical tests like the ARC-AGI benchmark.

So, is AGI right around the corner?

Not necessarily. A few big-picture questions about reinforcement learning and language models remain unanswered and loom large. These questions inspire lively debate and widely varying opinions in the world of artificial intelligence today. Their answers will determine how powerful AI gets in the coming months.

A Few Big Unanswered Questions

How big is the universe of tasks that can be verified and therefore mastered via RL?

Today’s cutting-edge RL methods rely on problems whose answers can be objectively verified as either right or wrong. Unsurprisingly, then, RL has proven exceptional at producing AI systems that are world-class at math, coding, logic puzzles and standardized tests. But what about the many problems in the world that don’t have easily verifiable answers?

In a provocative essay titled “The Problem With Reasoners”, Aidan McLaughlin elegantly articulates this point: “Remember that reasoning models use RL, RL works best in domains with clear/frequent reward, and most domains lack clear/frequent reward.”

McLaughlin argues that most domains that humans actually care about are not easily verifiable, and we will therefore have little success using RL to make AI superhuman at them: for instance, giving career advice, managing a team, understanding social trends, writing original poetry, investing in startups.

A few counterarguments to this critique are worth considering.

The first centers on the concepts of transfer learning and generalizability. Transfer learning is the idea that models trained in one area can transfer those learnings to improve in other areas. Proponents of transfer learning in RL argue that, even if reasoning models are trained only on math and coding problems, this will endow them with broad-based reasoning skills that will generalize beyond those domains and enhance their ability to tackle all sorts of cognitive tasks.

“Learning to think in a structured way, breaking topics down into smaller subtopics, understanding cause and effect, tracing the connections between different ideas: these skills should be broadly helpful across problem spaces,” said Dhruv Batra, cofounder/chief scientist at Yutori and former senior AI researcher at Meta. “This isn’t so different from how we approach education for humans: we teach kids basic numeracy and literacy in the hopes of creating a generally well-informed and well-reasoning population.”

Put more strongly: if you can solve math, you can solve anything. Anything that can be done with a computer, after all, ultimately boils down to math.

It’s an intriguing hypothesis. But to date, there is no conclusive evidence that RL endows LLMs with reasoning capabilities that generalize beyond easily verifiable domains like math and coding. It is no coincidence that the most important advances in AI in recent months, both from a research and a commercial perspective, have occurred in precisely these two fields.

If RL can only give AI models superhuman powers in domains that can be easily verified, this represents a serious limit to how far RL can advance the frontiers of AI’s capabilities. AI systems that can write code or do mathematics as well as or better than humans are undoubtedly valuable. But true general-purpose intelligence consists of much more than this.

Let us consider another counterpoint on this topic, though: what if verification systems can in fact be built for many (or even all) domains, even if those domains are not as clearly deterministic and checkable as a math problem?

Might it be possible to develop a verification system that can reliably determine whether a novel, or a government policy, or a piece of career advice, is “good” or “successful” and therefore should be positively reinforced?

This line of thinking quickly leads us into borderline philosophical considerations.

In many fields, determining the “goodness” or “badness” of a given outcome would seem to involve value judgments that are irreducibly subjective, whether on ethical or aesthetic grounds. For instance, is it possible to determine that one public policy outcome (say, reducing the federal deficit) is objectively superior to another (say, expanding a certain social welfare program)? Is it possible to objectively identify that a painting or a poem is or is not “good”? What makes art “good”? Is beauty not, after all, in the eye of the beholder?

Certain domains simply do not possess a “ground truth” to learn from, but rather only differing values and tradeoffs to be weighed.

Even in such domains, though, another possible approach exists. What if we could train an AI via many examples to instinctively identify “good” and “bad” outcomes, even if we can’t formally define them, and then have that AI serve as our verifier?

As Julien Launay, CEO/cofounder of RL startup Adaptive ML, put it: “In bridging the gap from verifiable to non-verifiable domains, we are essentially looking for a compiler for natural language…but we already have built this compiler: that’s what large language models are.”

This approach is often referred to as reinforcement learning from AI feedback (RLAIF) or “LLM-as-a-Judge.” Some researchers believe it is the key to making verification possible across more domains.

But it is not clear how far LLM-as-a-Judge can take us. The reason that reinforcement learning from verifiable rewards has led to such incisive reasoning capabilities in LLMs in the first place is that it relies on formal verification methods: correct and incorrect answers exist to be discovered and learned.

LLM-as-a-Judge seems to bring us back to a regime more closely resembling RLHF, whereby AI models can be fine-tuned to internalize whatever preferences and value judgments are contained in the training data, arbitrary though they may be. This merely punts the problem of verifying subjective domains to the training data, where it may remain as unsolvable as ever.

We can say this much for sure: to date, neither OpenAI nor Anthropic nor any other frontier lab has debuted an RL-based system with superhuman capabilities in writing novels, or advising governments, or starting companies, or any other activity that lacks obvious verifiability.

This does not mean that the frontier labs are not making progress on the problem. Indeed, just last month, leading OpenAI researcher Noam Brown shared on X: “We developed new techniques that make LLMs a lot better at hard-to-verify tasks.”

Rumors have even begun to circulate that OpenAI has developed a so-called “universal verifier,” which can provide an accurate reward signal in any domain. It is hard to imagine how such a universal verifier would work; no concrete details have been shared publicly.

Time will tell how powerful these new techniques are.

What will happen when we massively scale RL?

It is important to remember that we are still in the earliest innings of the reinforcement learning era in generative AI.

We have just begun to scale RL. The total amount of compute and training data devoted to reinforcement learning remains modest compared to the level of resources spent on pretraining foundation models.

This chart from a recent OpenAI presentation speaks volumes:

When it comes to scaling RL, we are just getting started. Source: OpenAI

At this very moment, AI organizations are preparing to deploy vast sums to scale up their reinforcement learning efforts as quickly as they can. As the chart above depicts, RL is about to transition from a relatively minor component of AI training budgets to the main focus.

What goes into scaling RL?

“Perhaps the most important ingredient when scaling RL is the environments: in other words, the settings in which you unleash the AI to explore and learn,” said Stanford AI researcher Andy Zhang. “In addition to sheer quantity of environments, we need higher-quality environments, especially as model capabilities improve. This will require thoughtful design and implementation of environments to ensure diversity and goldilocks difficulty and to avoid reward hacking and broken tasks.”

When xAI debuted its new frontier model Grok 4 last month, it announced that it had devoted “over an order of magnitude more compute” to reinforcement learning than it had with previous models.

We have many more orders of magnitude to go.

Today’s RL-powered models, while powerful, face shortcomings. The unsolved challenge of difficult-to-verify domains, discussed above, is one. Another critique is known as elicitation: the hypothesis that reinforcement learning does not actually endow AI models with greater intelligence but rather just elicits capabilities that the base model already possessed. Yet another obstacle that RL faces is its inherent sample inefficiency compared to other AI paradigms: RL agents must do a tremendous amount of work to receive a single bit of feedback. This “reward sparsity” has made RL impracticable to deploy in many contexts.

It is possible that scale will be a tidal wave that washes all of these concerns away.

If there is one principle that has defined frontier AI in recent years, after all, it is this: nothing matters more than scale.

When OpenAI scaled from GPT-2 to GPT-3 to GPT-4 between 2019 and 2023, the models’ performance gains and emergent capabilities were astonishing, far exceeding the community’s expectations.

At every step, skeptics identified shortcomings and failure modes with these models, claiming that they revealed fundamental weaknesses in the technology paradigm and predicting that progress would soon hit a wall. Instead, the next generation of models would blow past these shortcomings, advancing the frontier by leaps and bounds and demonstrating new capabilities that critics had previously argued were impossible.

The world’s leading AI players are betting that a similar pattern will play out with reinforcement learning.

If recent history is any guide, it is a good bet to make.

But it is important to remember that AI “scaling laws” (which predict that AI performance increases as data, compute and model size increase) are not actually laws in any sense of that word. They are empirical observations that for a time proved reliable and predictive for pretraining language models and that have been preliminarily demonstrated in other data modalities.

There is no formal guarantee that scaling laws will always hold in AI, nor how long they will last, nor how steep their slope will be.

The truth is that no one knows for sure what will happen when we massively scale up RL. But we are all about to find out.

As RL rapidly takes over the world of AI, what are the most compelling startup opportunities?

Stay tuned for our follow-up article on this topic, or feel free to reach out directly to discuss!

Looking Ahead

Reinforcement learning represents a compelling approach to building machine intelligence for one profound reason: it is not bound by human competence or imagination.

Training an AI model on vast troves of labeled data (supervised learning) will make the model exceptional at understanding those labels, but its knowledge will be limited to the annotated data that humans have prepared. Training an AI model on the entire internet (self-supervised learning) will make the model exceptional at understanding the totality of humanity’s existing knowledge, but it is not clear that this will enable it to generate novel insights that go beyond what humans have already put forth.

Reinforcement learning faces no such ceiling. It does not take its cues from existing human data. An RL agent learns for itself, from first principles, through first-hand experience.

AlphaGo’s “Move 37” serves as the archetypal example here. In one of its matches against human world champion Lee Sedol, AlphaGo played a move that violated thousands of years of accumulated human wisdom about Go strategy. Most observers assumed it was a miscue. Instead, Move 37 proved to be a brilliant play that gave AlphaGo a decisive advantage over Sedol. The move taught humanity something new about the game of Go. It has forever changed the way that human experts play the game.

The ultimate promise of artificial intelligence is not simply to replicate human intelligence. Rather, it is to unlock new forms of intelligence that are radically different from our own: forms of intelligence that can come up with ideas that we never would have come up with, make discoveries that we never would have made, help us see the world in previously unimaginable ways.

We have yet to see a “Move 37 moment” in the world of generative AI. It may be a matter of weeks or months, or it may never happen. Watch this space.