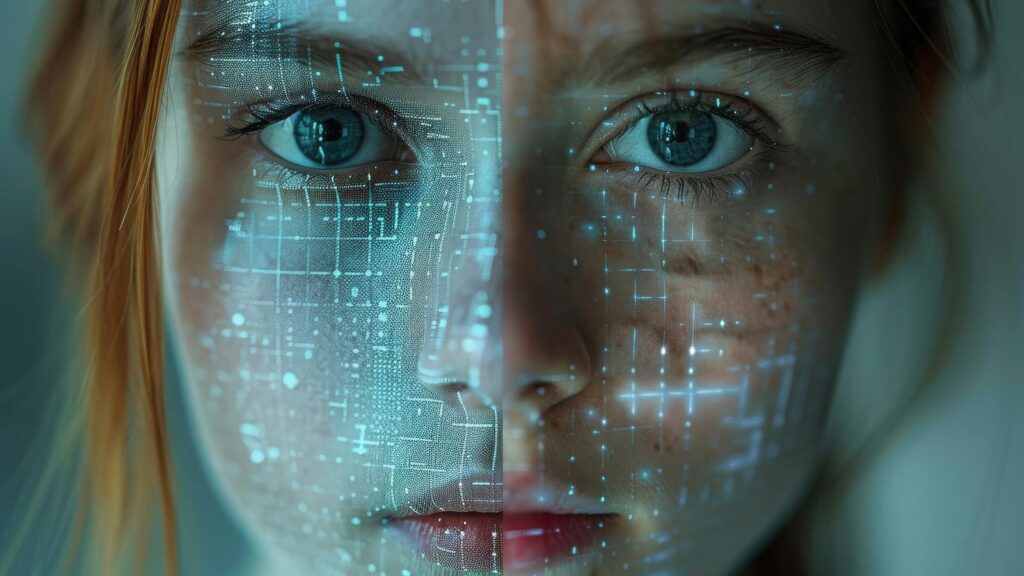

AI nudification apps are making it frighteningly easy to create fake sexualized images of women and teenagers, sparking a surge in abuse, blackmail and online exploitation. Adobe Stock

The rise of AI “nudification” tools makes it shockingly easy for anyone to create a fake naked image of you (or of any of your family, friends or colleagues) using nothing more than a photo and one of many readily available AI apps.

The existence of tools that let users create non-consensual sexualized images might seem like an inevitable consequence of the development of AI image generation. But with these apps downloaded 15 million times since 2022, and deepfaked nude content increasingly used to bully victims and expose them to danger, it’s not a problem that society can or should ignore.

There have been calls for the apps to be banned, and criminal penalties for creating and spreading non-consensual intimate images have been introduced in some countries. But this has done little to stem the flood, with one in four 13- to 19-year-olds reportedly exposed to fake, sexualized images of someone they know.

Let’s look at how these tools work, what the real risks are, and what steps we should be taking to minimize the harms that are already being caused.

What Are Nudification Apps And What Are The Risks?

Nudification apps use AI to create naked or sexualized images of people from the kind of everyday, fully clothed photos that anyone might upload to Facebook, Instagram or LinkedIn.

While men are sometimes the targets, research suggests that 99 percent of non-consensual, sexualized deepfakes feature women and girls. Overwhelmingly, these images are used as a form of abuse to bully, coerce or extort victims, and media coverage suggests they are having an increasingly real impact on women’s lives.

While faked nude images can be humiliating and potentially career-damaging for anyone, in some parts of the world they can leave women at risk of criminal prosecution or even serious violence.

Another shocking factor is the growing number of fake images of minors being created, which may or may not be derived from images of real children.

The Internet Watch Foundation reported a 400 percent rise in the number of URLs hosting AI-generated child sexual abuse material in the first six months of 2025. This type of content is seen as particularly dangerous, even when no real children are involved, with experts saying it can normalize abusive imagery, fuel demand, and complicate law enforcement investigations.

Unfortunately, media reports suggest that criminals have a clear financial incentive to get involved, with some making millions of dollars from selling fake content.

So, given the ease and scale with which these images can be created, and the devastating consequences they can have for victims’ lives, what is being done to stop it?

How Are Service Providers And Legislators Reacting?

Efforts to tackle the issue through regulation are underway in many jurisdictions, but so far, progress has been uneven.

In the US, the Take It Down Act makes online services, including social media platforms, responsible for taking down non-consensual deepfakes when asked to do so. And some states, including California and Minnesota, have passed laws making it illegal to distribute sexually explicit deepfakes.

In the UK, there are proposals to go further by imposing penalties for making, not merely distributing, non-consensual deepfakes, as well as an outright ban on nudification apps themselves. However, it isn’t clear how these tools would be defined and distinguished from AI used for legitimate creative purposes.

China’s generative AI measures contain several provisions aimed at mitigating the harm of non-consensual deepfakes. Among these are requirements that tools have built-in safeguards to detect and block illegal use, and that AI-generated content be watermarked in a way that allows its origin to be traced.

One frustration for those campaigning for a solution is that authorities haven’t always seemed willing to treat AI-generated image abuse as seriously as they would photographic image abuse, due to a perception that it “isn’t real”.

In Australia, this prompted the government’s online safety commissioner to call on schools to ensure all incidents are reported to police as sex crimes against children.

Of course, online service providers have a hugely important role to play, too. Just this month, Meta announced that it is suing the makers of the CrushAI app for attempting to circumvent its restrictions on advertising nudification apps on its Facebook platform.

This came after online investigators found that the makers of these apps are frequently able to evade measures put in place by service providers to limit their reach.

What Can The Rest Of Us Do?

The rise of AI nudification apps should serve as a warning that transformative technologies like AI can change society in ways that aren’t always welcome.

But we should also remember that the post-truth age and “the end of privacy” are only possible futures, not guaranteed outcomes.

What the future looks like will depend on what we decide is acceptable or unacceptable now, and on the actions we take to uphold those decisions.

From a societal perspective, this means education. Critically, there should be a focus on the behavior and attitudes of school-age children, to make them aware of the harm that can be caused.

From a business perspective, it means developing an awareness of how this technology can affect staff, particularly women. HR departments should ensure there are systems and policies in place to support those who may become victims of blackmail or harassment campaigns involving deepfaked images or videos.

And technological solutions have a role to play in detecting when these images are shared and uploaded, and potentially removing them before they can cause harm. Watermarking, filtering and collaborative community moderation could all be part of the solution.

Failing to act decisively now will mean that deepfakes, nude or otherwise, are likely to become an increasingly problematic part of everyday life.