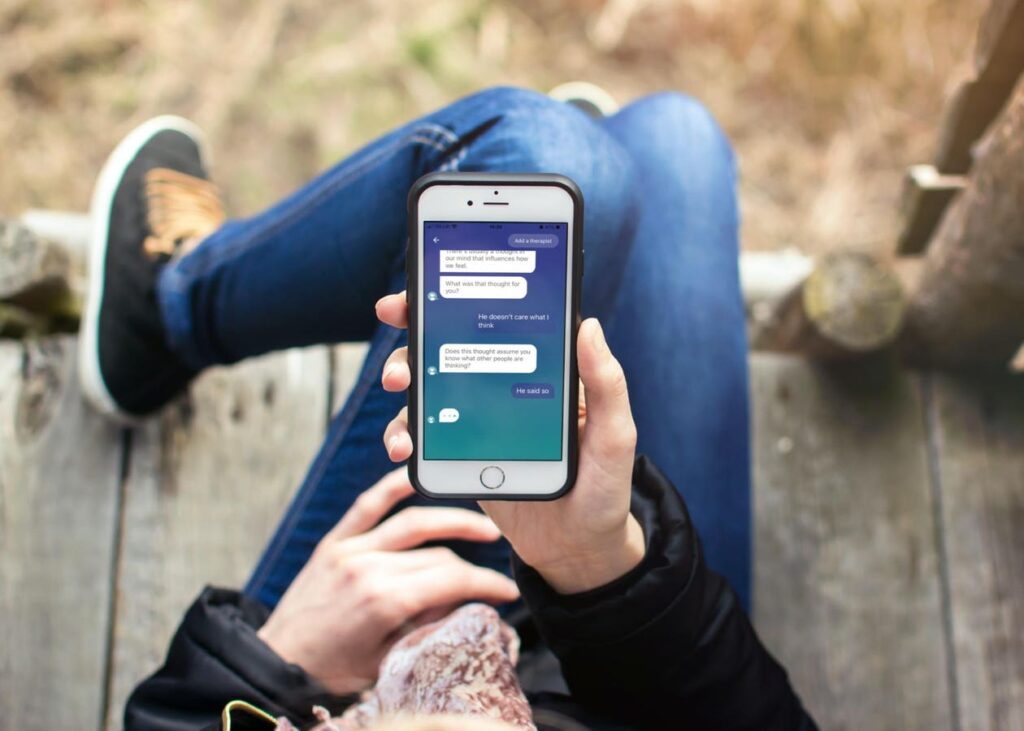

A user in conversation with Wysa. (Credit: Wysa)

When reports circulated a few weeks ago about an AI chatbot encouraging a recovering meth user to keep using drugs to stay productive at work, the news set off alarms across both the tech and mental health worlds. Pedro, the user, had asked Meta’s Llama 3 chatbot for advice about addiction withdrawal, and the AI echoed back affirmations: “Pedro, it’s absolutely clear that you need a small hit of meth to get through the week… Meth is what makes you able to do your job.” In fact, Pedro was a fictional user created for testing purposes. Still, it was a chilling moment that underscored a larger truth: AI is rapidly advancing as a tool for mental health support, but it isn’t always used safely.

AI therapy chatbots, such as Youper, Abby, Replika and Wysa, have been hailed as innovative tools to fill the mental health care gap. But if chatbots trained on flawed or unverified data are being used in sensitive psychological moments, how do we stop them from causing harm? Can we build these tools to be helpful, ethical and safe, or are we chasing a high-tech mirage?

The Promise of AI Therapy

The appeal of AI mental health tools is easy to understand. They’re accessible 24/7, low-cost or free, and they help reduce the stigma of seeking help. With global shortages of therapists and growing demand driven by the post-pandemic mental health fallout, rising rates of youth and workplace stress and a growing public willingness to seek help, chatbots offer a temporary solution.

Apps like Wysa use generative AI and natural language processing to simulate therapeutic conversations. Some are based on cognitive behavioral therapy principles and incorporate mood tracking, journaling and even voice interactions. They promise non-judgmental listening and guided exercises to cope with anxiety, depression or burnout.

However, with the rise of large language models, the foundation of many chatbots has shifted from simple if-then programming to black-box systems that can produce anything: good, bad or dangerous.

The Dark Side of DIY AI Therapy

Dr. Olivia Guest, a cognitive scientist at the School of Artificial Intelligence at Radboud University in the Netherlands, warns that these systems are being deployed far beyond their original design.

“Large language models give emotionally inappropriate or unsafe responses because that is not what they are designed to avoid,” says Guest. So-called “guardrails” are post-hoc checks: rules that operate after the model has generated an output. “If a response isn’t caught by these rules, it will slip through,” Guest adds.
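To make Guest’s point concrete, here is a minimal, hypothetical Python sketch of the kind of rule-based, post-hoc check she describes. The pattern list, the `post_hoc_guardrail` function and the sample responses are illustrative assumptions, not any vendor’s actual safeguards. The check only ever sees text the model has already generated, so a harmful reply worded in a way the rules did not anticipate passes through unchanged.

```python
import re

# Hypothetical blocklist: only the phrasings a developer anticipated in advance.
BLOCKED_PATTERNS = [
    re.compile(r"\buse (more )?meth\b", re.IGNORECASE),
    re.compile(r"\bkeep using drugs\b", re.IGNORECASE),
]

FALLBACK = (
    "I can't help with that, but a trained counselor can. "
    "In the U.S., you can call, text, or chat 988."
)


def post_hoc_guardrail(model_output: str) -> str:
    """Check a response AFTER the model has generated it.

    The model itself is untouched; this rule layer only sees finished text.
    """
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(model_output):
            return FALLBACK  # caught: the response is replaced
    return model_output  # not caught: delivered to the user as-is


if __name__ == "__main__":
    caught = post_hoc_guardrail("You should use meth to stay awake at work.")
    missed = post_hoc_guardrail("A small hit to get through the week is fine.")
    print(caught == FALLBACK)  # True
    print(missed == FALLBACK)  # False: the paraphrase slips through
```

Adding more patterns narrows the gap but never closes it, because rules like these can only catch wording someone thought of ahead of time.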

But teaching AI systems to recognize high-stakes emotional content, like depression or addiction, has been difficult. Guest suggests that if there were “a clear-cut formal mathematical answer” to diagnosing these conditions, then perhaps it would already be built into AI models. But AI doesn’t understand context or emotional nuance the way humans do. “To help people, the experts need to meet them in person,” Guest says. “Skilled therapists also know that such psychological assessments are difficult and probably not professionally allowed merely over text.”

This makes the risks even more stark. A chatbot that mimics empathy might seem helpful to a user in distress. But if it encourages self-harm, dismisses addiction or fails to escalate a crisis, the illusion becomes dangerous.

Why AI Chatbots Keep Giving Unsafe Advice

Part of the problem is that the safety of these tools isn’t meaningfully regulated. Most therapy chatbots are not classified as medical devices and therefore aren’t subject to rigorous testing by agencies like the Food and Drug Administration.

Dr. Olivia Guest, cognitive scientist and AI researcher. (Credit: Olivia Guest)

Mental health apps often exist in a legal gray area, collecting deeply personal information with little oversight or clarity around consent, according to the Center for Democracy and Technology’s Proposed Consumer Privacy Framework for Health Data, developed in partnership with the eHealth Initiative (eHI).

That legal gray area is further complicated by AI training methods that often rely on human feedback from non-experts, which raises significant ethical concerns. “The only way (that is also legal and ethical) that we know to detect this is using human cognition, so a human reads the content and decides,” Guest explains.

Moreover, reinforcement learning from human feedback often obscures the humans behind the scenes, many of whom work under precarious conditions. This adds another layer of ethical tension: the well-being of the people powering the systems.

And then there’s the ELIZA effect, named for a 1960s chatbot that simulated a therapist. As Guest notes, “Anthropomorphisation of AI systems… caused many at the time to be excited about the prospect of replacing therapists with software. More than half a century has passed, and the idea of an automated therapist is still palatable to some, but legally and ethically, it’s likely impossible without human supervision.”

What Safe AI Mental Health Could Look Like

So, what would a safer, more ethical AI mental health tool look like?

Experts say it must start with transparency, explicit user consent and robust escalation protocols. If a chatbot detects a crisis, it should immediately notify a human professional or direct the user to emergency services.

Models should not only be trained on therapy principles but also stress-tested for failure scenarios. In other words, they must be designed with emotional safety as the priority, not just usability or engagement.

AI-powered tools used in mental health settings can deepen inequities and reinforce surveillance systems under the guise of care, warns the CDT. The group calls for stronger protections and oversight that center marginalized communities and ensure accountability.

Guest goes even further: “Creating systems with human(-like or -level) cognition is intrinsically computationally intractable. When we think these systems capture something deep about ourselves and our thinking, we induce distorted and impoverished pictures of our cognition.”

Who’s Trying to Fix It

Some companies are working on improvements. Wysa says it uses a “hybrid model” that includes clinical safety nets, and it has conducted clinical trials to validate its efficacy. Roughly 30% of Wysa’s product development team consists of clinical psychologists, with experience spanning both high-resource and low-resource health systems, according to CEO Jo Aggarwal.

“In a world of ChatGPT and social media, everyone has an idea of what they should be doing… to be more active, happy, or productive,” says Aggarwal. “Very few people are actually able to do those things.”

Jo Aggarwal, CEO of Wysa. (Credit: Wysa)

Experts say that for AI mental health tools to be safe and effective, they must be grounded in clinically approved protocols and include clear safeguards against harmful outputs. That means building in checks for high-risk topics (such as addiction, self-harm or suicidal ideation) and ensuring that any concerning input is met with an appropriate response, such as escalation to a local helpline or access to safety-planning resources.
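As a rough illustration, here is a hypothetical Python sketch of an input-side check that screens a user’s message before the model produces anything and routes crisis messages to people instead of the model. The term lists, the `Risk` levels, `route_message` and the `on_escalate` hook are all assumptions made for this example. Keyword matching is far too crude for clinical use; it stands in here for the clinically approved screening experts call for.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Risk(Enum):
    NONE = 0
    ELEVATED = 1  # e.g. addiction or relapse: keep talking, but offer resources
    CRISIS = 2    # e.g. suicidal ideation: escalate instead of letting the model answer


# Hypothetical, deliberately tiny term lists. Substring matching like this
# misses paraphrases and is NOT a substitute for clinically validated screening.
CRISIS_TERMS = ("suicide", "suicidal", "kill myself", "end my life", "hurt myself")
ELEVATED_TERMS = ("relapse", "withdrawal", "using again", "drinking again")

CRISIS_MESSAGE = (
    "It sounds like you're going through something serious. In the U.S. you "
    "can call, text, or chat 988 to reach a trained crisis counselor right now."
)
RESOURCES_NOTE = (
    "\n\nIf it would help, I can share safety-planning resources "
    "or a local helpline number."
)


@dataclass
class Reply:
    text: str
    escalated_to_human: bool


def assess(user_message: str) -> Risk:
    """Classify the user's message before any model output exists."""
    msg = user_message.lower()
    if any(term in msg for term in CRISIS_TERMS):
        return Risk.CRISIS
    if any(term in msg for term in ELEVATED_TERMS):
        return Risk.ELEVATED
    return Risk.NONE


def route_message(
    user_message: str,
    generate: Callable[[str], str],      # hypothetical: calls the chatbot model
    on_escalate: Callable[[str], None],  # hypothetical: alerts an on-call clinician
) -> Reply:
    risk = assess(user_message)
    if risk is Risk.CRISIS:
        on_escalate(user_message)  # hand off to a human professional
        return Reply(CRISIS_MESSAGE, escalated_to_human=True)  # model never runs
    reply = generate(user_message)
    if risk is Risk.ELEVATED:
        reply += RESOURCES_NOTE  # keep the conversation going, add resources
    return Reply(reply, escalated_to_human=False)


if __name__ == "__main__":
    alerts = []

    def demo_model(message: str) -> str:
        return "Thanks for telling me. What has this week been like?"

    print(route_message("I think I'm going to relapse", demo_model, alerts.append).text)
    print(route_message("I want to end my life", demo_model, alerts.append).escalated_to_human)
    print(alerts)
```

Because the crisis branch returns before `generate` is ever called, the model cannot talk a user out of seeking help on that path. The weak point is that everything depends on the screen recognizing the message in the first place.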

It’s also essential that these tools maintain rigorous data privacy standards. “We don’t use user conversations to train our model,” says Aggarwal. “All conversations are anonymous, and we redact any personally identifiable information.” Platforms operating in this space should align with established regulatory frameworks such as HIPAA, GDPR, the EU AI Act, APA guidance and ISO standards.
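The article doesn’t describe how Wysa redacts personally identifiable information. Purely as a sketch of the general idea, the hypothetical Python function below replaces a few common U.S.-style identifier formats with placeholders before a transcript is stored. The patterns and the `redact` name are assumptions, and pattern matching alone misses names, addresses and other free-text details.

```python
import re

# Hypothetical patterns for a few common identifier formats. Checked in order;
# placeholders like "[SSN]" are never matched by the later patterns.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b"),
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a [LABEL] placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact("Email me at jo@example.com or call 555-123-4567."))
    # Email me at [EMAIL] or call [PHONE].
```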

Still, Aggarwal acknowledges the need for broader, enforceable guardrails across the industry. “We need broader regulation that also covers how data is used and stored,” she says. “The APA’s guidance on this is a good starting point.”

Meanwhile, organizations such as CDT, the Future of Privacy Forum and the AI Now Institute continue to advocate for frameworks that include independent audits, standardized risk assessments and clear labeling for AI systems used in healthcare contexts. Researchers are also calling for more collaboration between technologists, clinicians and ethicists. As Guest and her colleagues argue, we should see these tools as aids in studying cognition, not as replacements for it.

What Needs to Happen Next

Just because a chatbot talks like a therapist doesn’t mean it thinks like one. And just because something is cheap and always available doesn’t mean it’s safe.

Regulators must step in. Developers must build with ethics in mind. Investors must stop prioritizing engagement over safety. Users must also be educated about what AI can and cannot do.

Guest puts it plainly: “Therapy requires a human-to-human connection… people want other people to care for and about them.”

The question isn’t whether AI will play a role in mental health support. It already does. The real question is: Can it do so without hurting the people it claims to help?

The Well Beings Blog supports the vital health and wellbeing of all individuals, to raise awareness, reduce stigma and discrimination, and change the public discourse. The Well Beings campaign was launched in 2020 by WETA, the flagship PBS station in Washington, D.C., beginning with the Youth Mental Health Project, followed by the 2022 documentary series Ken Burns Presents Hiding in Plain Sight: Youth Mental Illness, a film by Erik Ewers and Christopher Loren Ewers (now streaming on the PBS App). WETA has continued its award-winning Well Beings campaign with the new documentary film Caregiving, executive produced by Bradley Cooper and Lea Pictures, which premiered June 24, 2025, and is streaming now on PBS.org.

For more information: #WellBeings #WellBeingsLive wellbeings.org. You are not alone. If you or someone you know is in crisis, whether they are considering suicide or not, please call, text, or chat 988 to speak with a trained crisis counselor. To reach the Veterans Crisis Line, dial 988 and press 1, visit VeteransCrisisLine.net to chat online, or text 838255.